A Plain English Guide to AI Language Models

Discover how AI language models work in simple terms. This guide explains everything from core concepts to real-world business applications.

At their core, AI language models are powerful systems trained on staggering amounts of text, all to master one fundamental skill: predicting the next word in a sentence. This simple-sounding ability is what lets them generate incredibly human-like text, answer complex questions, and handle a whole range of language tasks.

Think of them as statistical pattern recognizers, not conscious minds.

What Are AI Language Models Anyway?

Imagine someone who has read every single book, article, and website on the internet. They have absorbed the connections between words, the natural flow of sentences, and the underlying structure of different ideas. That's a pretty good way to think about AI language models.

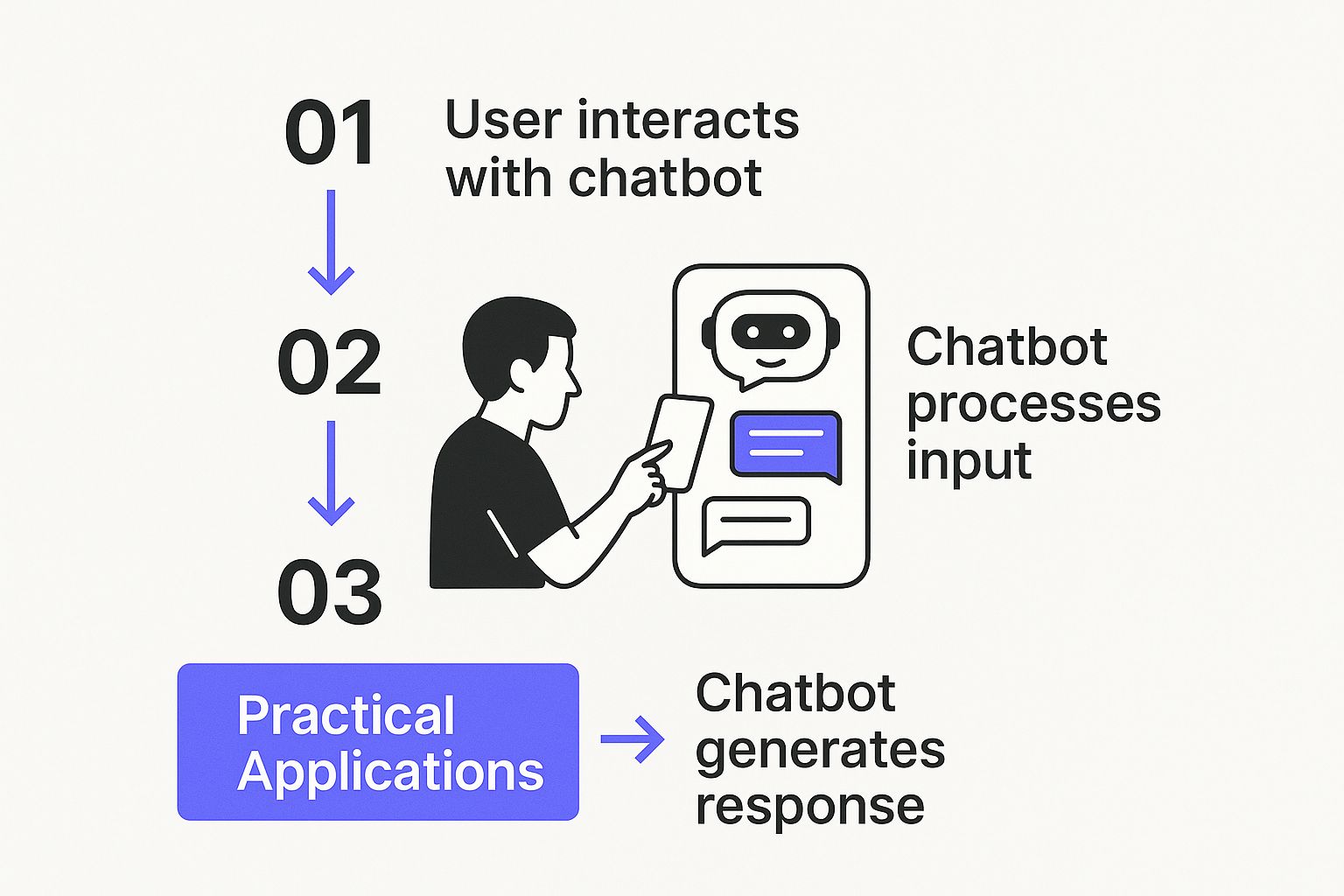

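These systems aren't "thinking" like we do. They're masters of probability. When you give a model a prompt, it calculates how likely each possible next word is, based on patterns learned from the trillions of examples it has already seen, and then picks one, usually from among the most likely candidates. (Technically, models work with "tokens," which can be whole words or pieces of words.)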

It then repeats that process, word by word, to build out sentences and paragraphs. This simple, repetitive prediction is what allows them to write essays, summarize documents, and even generate computer code.
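To make the word-by-word loop concrete, here is a toy sketch in Python. The probability table (`NEXT_WORD_PROBS`) is a hypothetical, hand-written stand-in for what a real model computes with a neural network, and real models work with tokens rather than whole words. The `greedy` flag shows two common ways to pick the next word: always take the most likely one, or sample in proportion to the probabilities.

```python
import random

# Hypothetical toy "model": for each word, a probability for every possible
# next word. A real model computes these numbers with a neural network.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "table": 0.2},
    "a": {"cat": 0.4, "dog": 0.6},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "table": {"<end>": 1.0},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def generate(max_words: int = 10, greedy: bool = True, seed: int = 0) -> list[str]:
    """Build a sentence one word at a time by repeatedly choosing a next word."""
    rng = random.Random(seed)
    words: list[str] = []
    current = "<start>"
    for _ in range(max_words):
        probs = NEXT_WORD_PROBS[current]
        if greedy:
            # Always take the single most likely next word.
            current = max(probs, key=probs.get)
        else:
            # Sample in proportion to probability, so less likely words
            # are sometimes chosen; this adds variety.
            current = rng.choices(list(probs), weights=list(probs.values()))[0]
        if current == "<end>":
            break
        words.append(current)
    return words


print(" ".join(generate()))  # greedy: the cat sat
print(" ".join(generate(greedy=False, seed=1)))
```

With the default greedy setting the loop always produces "the cat sat"; sampling (`greedy=False`) can also produce phrases like "a dog ran", which is one reason the same prompt can yield different answers.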

The Foundation of Modern AI Communication

The first step in creating a language model is a phase called pre-training, where the goal is to develop a general knowledge of language. By analyzing massive datasets, usually scraped from the public internet, the model picks up grammar, context, facts, and even different styles of reasoning. This process is often described as self-supervised (or, loosely, unsupervised): no one labels the examples, because the text itself supplies the answer, namely the word that actually comes next.

The paradox of large language models is that you have to write for them in order to get them to write for you. If you write a prompt that is vague, ambiguous, disorganized, and unfocused, the model will give you output with those same characteristics.

This foundational knowledge makes the model incredibly versatile. From there, it can be fine-tuned for more specific jobs. This specialization is what transforms a generalist model into a powerful tool for particular tasks, including:

- Content Creation: Drafting articles, social media posts, and marketing copy.

- Customer Support: Powering chatbots that can answer frequently asked questions.

- Data Analysis: Summarizing long reports or identifying trends in text data.

This capability is a core component of many modern AI systems. For a closer look at how these models are applied in interactive systems, you can learn more about what conversational AI is and how it works.

Ultimately, a model’s ability to communicate comes from recognizing statistical relationships in language, not from any true awareness of the world. Recognizing this distinction is the key to using these powerful tools effectively.

The Architectural Leap That Changed Everything

The journey of AI language models from simple text predictors to the sophisticated tools we use today wasn't a slow crawl. It was a jump, a single, powerful innovation that changed the game.

For a long time, models like Recurrent Neural Networks (RNNs) and their cousins, LSTMs, processed language one word at a time, in sequence. This worked okay for short sentences, but it created a massive bottleneck for anything longer.

Imagine trying to read a long novel one word at a time, hoping you'll remember the main character's name from chapter one by the time you hit the final page. That’s pretty much the challenge these early models faced. Information from the beginning of a text would get lost or diluted by the end, making it nearly impossible to get the bigger picture.

This meant complex tasks were off the table. The models couldn't reliably connect a pronoun to a subject from a few sentences back or maintain a consistent topic over a long article. Something had to change.

The Arrival of the Transformer Architecture

That game-changing moment arrived in 2017 with the Google research paper "Attention Is All You Need," which introduced the Transformer architecture. Instead of plodding through words one by one, the Transformer could look at an entire sentence all at once. This parallel processing made training much faster and allowed the model to weigh the importance of every word in relation to all the others.

The secret sauce behind this is something called the attention mechanism.

Think of it as the model’s ability to focus. When you read the sentence, "The cat, which had been hiding under the table all day, finally came out to eat its food," your brain instantly links "its" to "cat," even with all those words in between. The attention mechanism gives AI that same superpower, letting it zero in on the most relevant words for context, no matter where they are in the text.
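For readers who want to peek under the hood, here is a minimal sketch of scaled dot-product attention, the calculation at the heart of this mechanism, written in plain Python. Each word gets a query, a key, and a value vector; a word's output is a weighted average of every word's value, with weights set by how well its query matches each key. The three 2-D word vectors are hypothetical, and for simplicity the same vectors serve as queries, keys, and values; a real Transformer learns separate projections, uses far larger vectors, and runs many attention "heads" in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]], keys: list[list[float]],
              values: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product attention.

    For each query, score every key (dot product / sqrt(dimension)), turn the
    scores into weights with softmax, and return the weighted average of the
    value vectors along with the weights themselves.
    """
    dim = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        weight_rows.append(weights)
    return outputs, weight_rows


# Hypothetical 2-D vectors for three words; real models learn vectors with
# hundreds or thousands of dimensions.
words = ["cat", "table", "its"]
vectors = [[2.0, 0.0], [0.0, 2.0], [2.0, 0.2]]
_, weights = attention(vectors, vectors, vectors)
for word, w in zip(words, weights[2]):
    print(f"'its' -> {word}: {w:.2f}")
```

In this toy, "its" puts much more weight on "cat" than on "table" because their vectors point in similar directions. Every word is scored against every other word in a single pass, which is what lets the Transformer process a sentence in parallel.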

The Transformer fundamentally changed how models "see" language. Instead of a linear sequence, they could now perceive a web of interconnected relationships between words, unlocking a far greater level of contextual awareness.

This breakthrough largely solved the long-range memory problem that held back older models, at least within the model's context window, the maximum amount of text it can look at in one go. Suddenly, AI could handle long, complex text, paving the way for the powerful systems we rely on today.

Building Blocks of Modern AI Models

This architectural leap led directly to landmark models like BERT (Bidirectional Encoder Representations from Transformers) and the GPT (Generative Pre-trained Transformer) series. They use the Transformer in different ways (BERT builds on its encoder half, GPT on its decoder half), but both stand on its shoulders.

- BERT's Approach: BERT reads context from both directions at once, looking at the words to the left and to the right of each word. This makes it exceptionally good at understanding tasks that need a picture of the whole sentence, like classifying text, spotting names and places, or finding the answer to a question within a passage.

- GPT's Approach: The GPT family is built to generate text by predicting the next word in a sequence, looking only at the words that came before it. This design suits creating coherent, creative, and relevant content, from simple emails to detailed essays.
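The practical difference between the two approaches comes down to which words each position is allowed to look at. The Python sketch below, built around a hypothetical `visible_positions` helper, lists what each word can "see" in a bidirectional, BERT-style setup versus a left-to-right, GPT-style setup, where a word may only attend to itself and earlier words. It leaves out other details, such as BERT's training trick of hiding some words and asking the model to fill them in.

```python
def visible_positions(num_tokens: int, causal: bool) -> list[list[int]]:
    """For each token position, list the positions it may attend to.

    causal=False: bidirectional (BERT-style), every token sees the whole
    sentence, left and right.
    causal=True: left-to-right (GPT-style), a token sees only itself and
    earlier tokens, so the model can't peek at the word it must predict.
    """
    return [
        [j for j in range(num_tokens) if not causal or j <= i]
        for i in range(num_tokens)
    ]


tokens = ["the", "cat", "sat", "down"]
for name, causal in [("BERT-style", False), ("GPT-style", True)]:
    print(name)
    for i, seen in enumerate(visible_positions(len(tokens), causal)):
        print(f"  {tokens[i]:>4} sees {[tokens[j] for j in seen]}")
```

In a real model these visibility rules are applied as a mask inside the attention calculation, blocking the scores for any position a word isn't allowed to see.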

The wild success of these models has lit a fire under AI development. The global market for large language models, estimated at USD 8.59 billion, is now projected to hit USD 67.69 billion by 2032, growing at a compound annual rate of 34.3%.

This explosive growth is a direct result of the power unlocked by the Transformer. You can read more about the growth of the large language model market and its drivers. This was more than an improvement; it was the start of an entirely new class of tools.
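Projections like the one above rest on the compound annual growth rate (CAGR) formula: end value = start value × (1 + rate)^years. The small Python sketch below solves that formula for the number of years. The source doesn't state the base year for the USD 8.59 billion estimate, but the quoted figures are consistent with about seven years of 34.3% annual growth.

```python
import math


def project(start: float, rate: float, years: float) -> float:
    """Compound growth: start * (1 + rate) ** years."""
    return start * (1 + rate) ** years


def implied_years(start: float, end: float, rate: float) -> float:
    """Solve start * (1 + rate) ** n = end for n."""
    return math.log(end / start) / math.log(1 + rate)


# Market figures quoted above, in USD billions.
n = implied_years(8.59, 67.69, 0.343)
print(f"{n:.1f} years of growth")        # 7.0 years of growth
print(f"{project(8.59, 0.343, 7):.2f}")  # 67.69
```

The same check works for any of the market forecasts in this guide: plug in the start value, end value, and growth rate, and see how many years of compounding they assume.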

How an AI Model Goes from Knowing Nothing to Writing Code

An AI language model starts out as a blank slate: a massive digital network of connections whose numerical settings (called parameters) begin as random values, with no grammar, facts, or sense of context. The journey from that empty state to a tool that can write poetry or debug code is a fascinating process of learning at an almost unimaginable scale.

It’s a multi-stage process, designed to first build a broad, general foundation of knowledge before sharpening it for very specific jobs.

Stage 1: Pre-Training (AKA "Read the Entire Internet")

The journey begins with pre-training. Think of this as the model's general education, where it’s exposed to a gigantic library of public text and code scraped from the internet. In this phase, its only job is to do one thing over and over: predict the next word in a sentence.

By doing this trillions of times, it starts to absorb the statistical patterns of language. This is how the model learns the fundamentals on its own. It picks up grammar rules, common phrases, and factual connections just by observing how words appear together. It doesn't "know" that Paris is a capital city, but it learns that after the words "The capital of France is," the word "Paris" is extremely likely to follow.
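A simple counting model captures the statistical idea behind this, even though real language models learn with neural networks and gradient descent rather than by tallying word pairs. The Python sketch below, using a tiny hypothetical corpus, counts which word follows each short run of words and turns those counts into probabilities. It also shows why context matters: with one word of context, "is" could be followed by several words, but with two words, "france is" points squarely at "paris".

```python
from collections import Counter, defaultdict


def train_ngrams(corpus: list[str], context_size: int) -> dict[tuple[str, ...], Counter]:
    """Count which word follows each run of `context_size` words in the text."""
    follows: dict[tuple[str, ...], Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            follows[context][words[i]] += 1
    return follows


def next_word_probs(follows: dict[tuple[str, ...], Counter],
                    context: tuple[str, ...]) -> dict[str, float]:
    """Convert the counts for one context into probabilities."""
    counts = follows.get(context, Counter())
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()} if total else {}


corpus = [
    "the capital of france is paris",
    "the capital of italy is rome",
    "paris is the capital of france",
    "the capital of france is paris",
]

# One word of context: "is" is followed by several different words.
print(next_word_probs(train_ngrams(corpus, 1), ("is",)))
# {'paris': 0.5, 'rome': 0.25, 'the': 0.25}

# Two words of context: "france is" is always followed by "paris" here.
print(next_word_probs(train_ngrams(corpus, 2), ("france", "is")))
# {'paris': 1.0}
```

A neural language model plays the same prediction game, but instead of storing a lookup table it compresses these patterns into its parameters, which lets it generalize to word sequences it has never seen verbatim.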

A good way to picture this is to think of a chef in training. Pre-training is like the chef spending years learning every cooking skill imaginable: knife work, sauce making, baking, butchery, you name it. They aren't an expert in any single cuisine yet, but they have a powerful foundation that can be applied to almost anything.

The whole point of pre-training is to build a generalized map of the world as it's represented in text. The model develops a rich, internal picture of how words, concepts, and ideas relate to one another, making it a flexible base for more advanced skills.

This first step is incredibly resource-intensive, demanding huge amounts of computing power and colossal datasets. The quality of that data is everything; a model trained on a diverse, high-quality collection of text will have a much stronger starting point.

Stage 2: Fine-Tuning (AKA "Teach it a Specific Job")

After pre-training, the model has a general facility with language, but it isn't really built for any specific task. It’s like a super-powered autocomplete. That’s where the second stage comes in: fine-tuning.

Fine-tuning is all about customizing the pre-trained model for a particular role, like answering customer support tickets, summarizing legal documents, or generating marketing copy.

Let's go back to our chef. Fine-tuning is when that chef decides to specialize in, say, Italian or Japanese cuisine. They take all their general knowledge and apply it to a focused set of recipes and techniques, learning the specific nuances needed to become an expert in that one area.

This is done by training the model on a much smaller, hand-picked dataset designed for the job. For instance, to create a helpful chatbot, you'd fine-tune a model on thousands of high-quality question-and-answer pairs. This teaches the model the specific style and format of a helpful, conversational assistant.
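Here is a rough idea of what such a dataset can look like on disk. This Python sketch wraps hypothetical question-and-answer pairs in a chat-style record (system, user, and assistant messages) and serializes them as JSON Lines, one example per line. The `messages`/`role`/`content` layout resembles what some hosted fine-tuning services accept, but treat the field names, the `SYSTEM_PROMPT`, and the company name `ExampleCo` as assumptions and check your provider's documentation for the exact schema.

```python
import json

# Hypothetical Q&A pairs a support team might curate.
QA_PAIRS = [
    ("How do I reset my password?",
     "Open Settings, choose Reset password, and follow the link we email you."),
    ("Can I change my delivery address after ordering?",
     "Yes, as long as the order hasn't shipped. Open the order and choose Edit address."),
]

# Hypothetical instruction that sets the assistant's tone in every example.
SYSTEM_PROMPT = "You are a friendly, concise support assistant for ExampleCo."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one Q&A pair as a chat record: system, user, then the ideal reply."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(to_chat_example(q, a)) + "\n" for q, a in pairs)


print(to_jsonl(QA_PAIRS), end="")
```

To save the result, write the returned string to a file such as `train.jsonl`. A real dataset would hold thousands of pairs like these, each showing the model the answer style you want it to learn.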

To bring this all together, we've created a simple table that breaks down these two core training stages.

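Comparison of Language Model Training Approaches

| | Pre-Training | Fine-Tuning |
|---|---|---|
| Goal | Build a general knowledge of language | Specialize the model for a particular job |
| Data | Massive, diverse public text and code from the internet | A much smaller, hand-picked dataset for the task (e.g., question-and-answer pairs) |
| Core task | Predict the next word, over and over | Learn the style and format the job requires |
| Cost | Extremely resource-intensive | Far cheaper than training a new model from scratch |
| Chef analogy | Learning every cooking skill | Specializing in one cuisine |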

This table shows the clear progression: start broad, then get specific. It’s this two-step process that makes modern AI so powerful.

Ultimately, fine-tuning is what transforms a general-purpose language model into a true specialist.

This combination of broad learning followed by focused specialization is the secret sauce behind today's most capable AI tools. It allows companies to create powerful, task-specific models without having to train a new one from scratch every time, which would be astronomically expensive and slow.

This approach has made building custom AI agents more accessible than ever. To see how this works in practice, check out our guide on how to build AI agents.

Putting AI Language Models to Work in Business

This is where the real value of AI language models clicks into place, when they stop being abstract ideas and start solving actual business problems. Across just about every industry, these systems are being used to automate tedious work, solve stubborn challenges, and unlock entirely new ways of operating. They’re no longer just for tech giants; they’re accessible tools that companies of all sizes can use to get ahead.

From customer service to creative work, the applications are as diverse as they are impactful. Businesses are discovering that language models can take on tasks that once soaked up huge amounts of human effort, freeing teams to focus on strategy and bigger-picture thinking. The key is to find the specific bottlenecks that are language-heavy, such as reading, writing, summarizing, or answering the same questions over and over.

Market trends confirm this isn't just hype. Large language models are now a foundational technology, with the global market expected to blow past USD 82 billion by 2033. Adoption is already widespread, with 67% of organizations integrating these models into their workflows. Retail and e-commerce are leading the charge, holding 27.5% of the market share by using AI for customer engagement and personalized marketing. You can dig deeper into these trends and discover more insights about LLM statistics on Hostinger.

Revolutionizing Customer Interactions

One of the most visible impacts of AI language models is in customer service. We've all dealt with old-school chatbots that could only follow rigid scripts. They’d get stuck on the slightest unexpected question, leading to dead ends and frustrated customers.

Today’s AI-powered chatbots are a completely different breed. When you train them on your company’s product manuals, FAQs, and support history, they can figure out the nuances of a customer’s problem and give genuinely helpful, conversational answers. This means they can handle a much wider range of issues, from simple order tracking to more complex troubleshooting.
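One common way to ground a chatbot in company documents doesn't retrain the model at all: it looks up the passage most relevant to the customer's question and places it in the prompt, an approach known as retrieval-augmented generation (RAG). The Python sketch below is a deliberately simplified, hypothetical version that ranks passages by shared words; production systems usually rank by embedding similarity, and this is not a description of how any particular product works. The resulting prompt would then be sent to a language model.

```python
import re

# Hypothetical help-center snippets; in practice these would come from your
# product manuals, FAQs, and support history.
DOCS = [
    "Orders ship within 2 business days. Track them from the Orders page.",
    "To reset your password, open Settings and choose Reset password.",
    "Refunds are issued to the original payment method within 5 days.",
]


def words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_passage(question: str, docs: list[str]) -> str:
    """Pick the passage sharing the most words with the question."""
    q = words(question)
    return max(docs, key=lambda d: len(q & words(d)))


def build_prompt(question: str, docs: list[str]) -> str:
    """Ground the model's answer in company text rather than its general memory."""
    context = best_passage(question, docs)
    return (
        "Answer using only the context below. If the answer isn't there, say so.\n"
        f"Context: {context}\n"
        f"Question: {question}"
    )


print(build_prompt("How do I track my order?", DOCS))
```

Keeping the model's source material in the prompt also makes answers easier to update: change the documents, and the chatbot's answers change with them.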

Here’s how they make a real difference:

- 24/7 Availability: AI assistants don’t sleep. They provide instant support to customers in any time zone without needing a human on standby.

- Instant Resolutions: For common questions, customers get answers immediately, which boosts satisfaction and lightens the load on human support teams.

- Scalability: An AI chatbot can handle thousands of conversations at once, something a human team could never manage during a sudden surge in demand.

This automation frees up human agents to focus on high-stakes, sensitive, or unusually difficult problems that require a human touch. The result is a more efficient support system and a much better customer experience.

Accelerating Content Creation and Marketing

Content is the engine of modern marketing, but consistently producing high-quality articles, social media posts, and emails is a massive challenge. AI language models are becoming powerful assistants for marketing teams, helping them break through writer's block and scale their output.

A content creator can use an AI model to brainstorm blog post ideas, generate an outline, or even draft the first version of an article. The AI does the initial heavy lifting, and the human writer steps in to refine, edit, and add their unique perspective. This partnership dramatically speeds up the entire content lifecycle.

By automating the initial drafting process, AI language models allow creators to spend less time staring at a blank page and more time on strategic thinking, editing, and making sure the final product resonates with their audience.

This same idea applies across other marketing activities. Models can generate dozens of variations of ad copy, social media posts, or email subject lines, letting teams A/B test to find what works best. It's a data-driven approach that helps optimize campaigns for better engagement and conversions. For a look at how these models work in global business, see our strategies for overcoming language barriers with AI.

Supporting Software Development and Analysis

The impact of AI language models goes deep into technical fields like software development. At its core, writing code is a language-based task, and models trained on huge repositories of open-source code have become exceptionally good at it.

Developers are now using AI-powered tools to:

- Generate Code Snippets: A developer can describe a function in plain English, and the AI will generate the corresponding code in a specific programming language.

- Debug and Explain Code: If a piece of code isn’t working, a developer can paste it into an AI tool and ask for an explanation or a potential fix.

- Translate Between Languages: AI can help convert code from one programming language to another, saving developers hours of manual rewriting.
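Because a plain-English request is the model's only input, being specific pays off. The hypothetical `build_code_prompt` helper below, sketched in Python, assembles a request that spells out the language, the task, constraints, and a sample input and output; the function name `count_business_days` inside the prompt is also just an illustration. Nothing here calls a model; the returned text is what a developer would paste into an AI coding tool.

```python
def build_code_prompt(task: str, language: str, constraints: list[str],
                      example: str = "") -> str:
    """Assemble a specific, well-organized request for a code model.

    Vague prompts tend to get vague code back; spelling out the language,
    constraints, and an example narrows what the model has to guess.
    """
    lines = [
        f"Write a {language} function.",
        f"Task: {task}",
        "Constraints:",
        *[f"- {c}" for c in constraints],
    ]
    if example:
        lines.append(f"Example: {example}")
    lines.append("Return only the code, with brief comments.")
    return "\n".join(lines)


prompt = build_code_prompt(
    task="Count the business days between two dates.",
    language="Python",
    constraints=[
        "Use only the standard library",
        "Treat Saturday and Sunday as non-business days",
        "Include the start date but not the end date",
    ],
    example="count_business_days(date(2024, 1, 1), date(2024, 1, 8)) returns 5",
)
print(prompt)
```

The example line matters as much as the description: January 1, 2024 was a Monday, so the expected answer of 5 pins down exactly how the model should handle weekends and the end date.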

These tools aren’t replacing developers. They’re acting as an intelligent "pair programmer," handling repetitive tasks and helping developers solve problems faster. This lets them spend more time on system architecture and creative problem-solving, the most valuable parts of their job. By accelerating development cycles, businesses can bring new products and features to market much more quickly.

The Reality of Model Limits and Ethical Questions

AI language models can do some incredible things, but it’s important to look past the hype. They aren't magic. These systems are powerful tools, but they come with significant limitations and raise some serious ethical questions that we can't ignore.

To use them well, you have to know their weaknesses just as much as their strengths.

One of the biggest issues you’ll run into is something called "hallucinations." It’s a strange term, but it’s what happens when a model generates information that is factually incorrect, nonsensical, or completely made up.

Because a model’s main goal is to predict the next most likely word in a sequence, it can confidently string together plausible-sounding sentences that have zero basis in reality. This is a huge risk if you take its output at face value without checking the facts.

This isn’t just a minor glitch; it’s a core challenge baked into how these models operate. They are expert pattern-matchers, not truth-seekers.

Biases Baked into the Data

A more subtle but equally serious problem is algorithmic bias. AI models learn from enormous amounts of text scraped from the internet, and unfortunately, that text contains the full spectrum of human biases, stereotypes, and prejudices.

As a result, models can accidentally learn and reproduce these harmful patterns. A model might associate certain jobs with specific genders or ethnicities simply because that’s what it saw most often in its training data. When these biased models are used in real-world applications like hiring tools, the outcomes can be deeply unfair.

The outputs of an AI language model are a direct reflection of its training data. If the data is biased, the model will be biased. Fixing this requires careful data curation and ongoing work to detect and filter out these learned prejudices.
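Detecting these learned prejudices often starts with simple measurement of the training data. The Python sketch below counts gendered pronouns in sentences that mention a given job, using a tiny, deliberately skewed, hypothetical corpus. Real audits are far more thorough (larger datasets, many demographic terms, and tests of the model's actual outputs), but the principle is the same: a lopsided count is a pattern the model can absorb.

```python
import re
from collections import Counter

# Hypothetical, deliberately skewed mini-corpus standing in for web text.
CORPUS = [
    "The nurse said she would check on the patient.",
    "The nurse told us she was busy.",
    "The engineer said he fixed the bug.",
    "The engineer explained that he had tested it.",
    "The nurse said he would be back soon.",
]


def pronoun_counts(corpus: list[str], job: str) -> Counter:
    """Count gendered pronouns in sentences that mention `job`."""
    counts: Counter = Counter()
    for sentence in corpus:
        tokens = re.findall(r"[a-z]+", sentence.lower())
        if job in tokens:
            counts.update(t for t in tokens if t in {"he", "she"})
    return counts


for job in ("nurse", "engineer"):
    print(job, dict(pronoun_counts(CORPUS, job)))
```

A model trained on text like this would learn that "engineer" is followed by "he" every time, which is exactly the kind of skew curation efforts try to catch and rebalance.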

This challenge has kicked off a major push for responsible AI development. Researchers are working hard to create more balanced datasets and build techniques to make models more fair and transparent. For a closer look at how different models tackle safety, you can check out our analysis of AI models like Claude and their commitment to ethical standards.

The Hidden Costs of AI

The sheer scale of modern language models also comes with some hefty costs that often fly under the radar, both financially and environmentally.

- Financial Investment: Training a state-of-the-art model requires a massive amount of specialized computing hardware. We’re talking costs that can run into the hundreds of millions of dollars, which concentrates a lot of power in the hands of a few big tech companies.

- Environmental Impact: The data centers that power AI training consume staggering amounts of electricity and water for cooling. The carbon footprint of a single training run can be equivalent to hundreds of transatlantic flights.
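Estimates like these usually come from simple arithmetic: energy equals the number of chips × power per chip × training hours × the data center's overhead factor (PUE, or power usage effectiveness, which covers cooling and other infrastructure), and emissions equal energy × the carbon intensity of the local electricity. The Python sketch below applies that formula to purely illustrative inputs, not measurements of any real model.

```python
def training_footprint(num_gpus: int, watts_per_gpu: float, hours: float,
                       pue: float, kg_co2_per_kwh: float) -> tuple[float, float]:
    """Rough estimate of energy (kWh) and emissions (tonnes CO2) for a training run.

    energy    = GPUs x power per GPU x hours x PUE (data-center overhead)
    emissions = energy x carbon intensity of the local grid
    """
    kwh = num_gpus * (watts_per_gpu / 1000) * hours * pue
    tonnes = kwh * kg_co2_per_kwh / 1000
    return kwh, tonnes


# Purely illustrative inputs: 1,000 GPUs at 400 W for 30 days.
kwh, tonnes = training_footprint(num_gpus=1000, watts_per_gpu=400, hours=30 * 24,
                                 pue=1.2, kg_co2_per_kwh=0.4)
print(f"{kwh:,.0f} kWh, {tonnes:,.1f} t CO2")  # 345,600 kWh, 138.2 t CO2
```

Change any input and the result scales with it; running the same job on a low-carbon grid, for example, shrinks the emissions figure without changing the energy used.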

These costs raise important questions about sustainability and who really gets to participate in building the future of AI.

The growth of the large language model market brings these tensions into sharp focus. The market hit an estimated USD 5.72 billion and is projected to explode to over USD 123 billion by 2034, growing at a compound annual rate of 35.92%. While this boom drives innovation, it also magnifies the challenges of ethical use and a widening skills gap. You can dig into the specifics in the full report on the large language model market.

Ultimately, you can’t get the full picture of AI without knowing its limits. Acknowledging hallucinations, biases, and hidden costs is the first step toward using this technology in a way that’s genuinely helpful and responsible.

A Few Common Questions About AI Language Models

As we've journeyed through this topic, a few questions tend to pop up again and again. This technology is moving so fast that it's easy to get tangled in the terminology or wonder how all the pieces really fit together.

Let’s clear up a few of the most common points of confusion. Think of this as a quick-reference guide to help solidify your understanding and give you a practical starting point.

How Do AI, Machine Learning, and Language Models Fit Together?

It's easy to get these terms mixed up, but their relationship is actually pretty simple. The easiest way to picture it is like a set of nesting dolls, where each concept fits neatly inside the next.

- Artificial Intelligence (AI) is the biggest doll. It's the whole grand idea of creating machines that can handle tasks that usually require human intelligence, things like solving problems, learning from experience, or making decisions.

- Machine Learning (ML) is the next doll down. It's a specific field within AI focused on building systems that learn directly from data. Instead of being programmed with a bunch of rules, these systems get better on their own by identifying patterns.

- AI Language Models are an even smaller doll inside machine learning. They're a specific application of ML, designed for one job: processing, understanding, and generating human language. They build this skill by analyzing absolutely massive amounts of text.

So, every language model is a product of machine learning, and all machine learning is a form of AI. It’s a hierarchy, with one field building on the next.

Do These Models Truly Understand Language?

This is a deep and fascinating question, and the short answer is no, at least not in the way you and I do. When we talk about language, we're connecting words to real-world experiences, emotions, and intentions. An AI model doesn't have any of that.

What it does have is an incredibly sophisticated ability to recognize and replicate statistical patterns in text. It has learned the mathematical relationships between words on a mind-boggling scale. When you ask it a question, it isn’t "knowing" the answer. It’s calculating the most probable sequence of words that should come next, based on everything it’s ever seen in its training data.

It’s like the difference between a parrot that can perfectly mimic human speech and a person who actually understands the meaning behind the words. The model is an expert mimic, but it lacks genuine comprehension, consciousness, or intent.

Keeping this distinction in mind is key to using these tools well. It helps you anticipate their limitations (like their tendency to confidently make things up) while still appreciating their strengths as powerful pattern-matching engines.

How Can a Small Business Start Using This Technology?

Getting started with AI language models is more accessible than you might think. You don’t need a team of data scientists or a huge budget to start experimenting. The easiest way in is through platforms and APIs that handle all the complicated infrastructure for you.

Here are a few practical first steps for any small business looking to dip a toe in the water:

- Identify a specific problem. Don't start with "we need AI." Start with a real bottleneck. Is your team buried under repetitive customer questions? Are you struggling to create marketing content consistently? Find the pain point first.

- Explore user-friendly platforms. Many tools now offer no-code or low-code solutions that let you build your own AI assistants. Platforms like Chatiant let you train a chatbot on your own website content or internal documents in just a few minutes.

- Start small and test. Kick things off with a low-stakes project. For example, build an internal chatbot to help your team find information in company handbooks. This lets you learn how the tech works and see its value before you point it at your customers.

The barrier to entry has dropped dramatically. With simple integrations and APIs, you can plug the power of AI language models into your existing tools without having to build anything from scratch. This opens the door for even the smallest teams to start automating tasks and working smarter.

Ready to put AI to work for your business? With Chatiant, you can build a custom AI agent trained on your own data in minutes. Create a powerful chatbot for your website, automate customer support, or build an internal helpdesk assistant. It's the simplest way to get started with practical AI. Learn more and build your first agent with Chatiant.