What Is RAG and How Does It Work?

Curious what RAG is? Learn how Retrieval-Augmented Generation makes AI models smarter and more reliable by connecting them to real-time information.

Think about the last time you used an AI chatbot. Did you wonder where it gets its answers? Most Large Language Models (LLMs) operate like a student in a closed-book exam. They can only use the information they were trained on, which can quickly become outdated.

But what if you could give that student an open book? That's the simple idea behind Retrieval-Augmented Generation (RAG). It is a method that lets an LLM look up fresh, relevant information from an external source before it even tries to answer your question.

Breaking Down Retrieval-Augmented Generation

Standard LLMs are stuck with what they know. If their training data is a year old, they can't tell you about anything that happened yesterday. This is where RAG comes in. A RAG system first "retrieves" key facts from a trusted knowledge base, like your company's internal documents or a live product database.

This one step makes a world of difference. It dramatically reduces the risk of the AI making things up, a problem known as "hallucination." Instead of giving a generic response, a RAG-powered chatbot can pull the latest product specs or recent policy changes to give a customer a precise, accurate answer.

Why RAG Is Gaining Momentum

The business world is catching on fast. The global RAG market was valued at around USD 1.3 billion in 2023 and is on a steep upward curve. Projections put its compound annual growth rate between 40.3% and 49.1%, with some estimates valuing the market at over USD 67 billion by 2034.

This growth shows how important RAG is for making AI genuinely useful for businesses.

RAG bridges the gap between the broad, general knowledge of an LLM and the specific, up-to-the-minute information a business needs. It makes AI models both smarter and more accountable.

Before we go further, let's quickly compare a standard LLM with one that's been improved with RAG. The table below breaks down the key differences in how they work.

Standard LLM vs RAG-Powered LLM at a Glance

In short, RAG fundamentally changes how the model generates answers, making it far more reliable for real-world applications.

So, what are the biggest takeaways? The key benefits really boil down to this:

- Improved Accuracy: Answers are based on verified, current data, not just the model's memory.

- Greater Trust: By citing its sources, RAG lets users see where the information came from, building credibility.

- More Relevance: The system uses the most current information available, making it perfect for dynamic environments like customer support or sales.

These improvements are especially valuable for tools that businesses rely on every day, like advanced AI chatbots for customer support and intelligent research assistants.

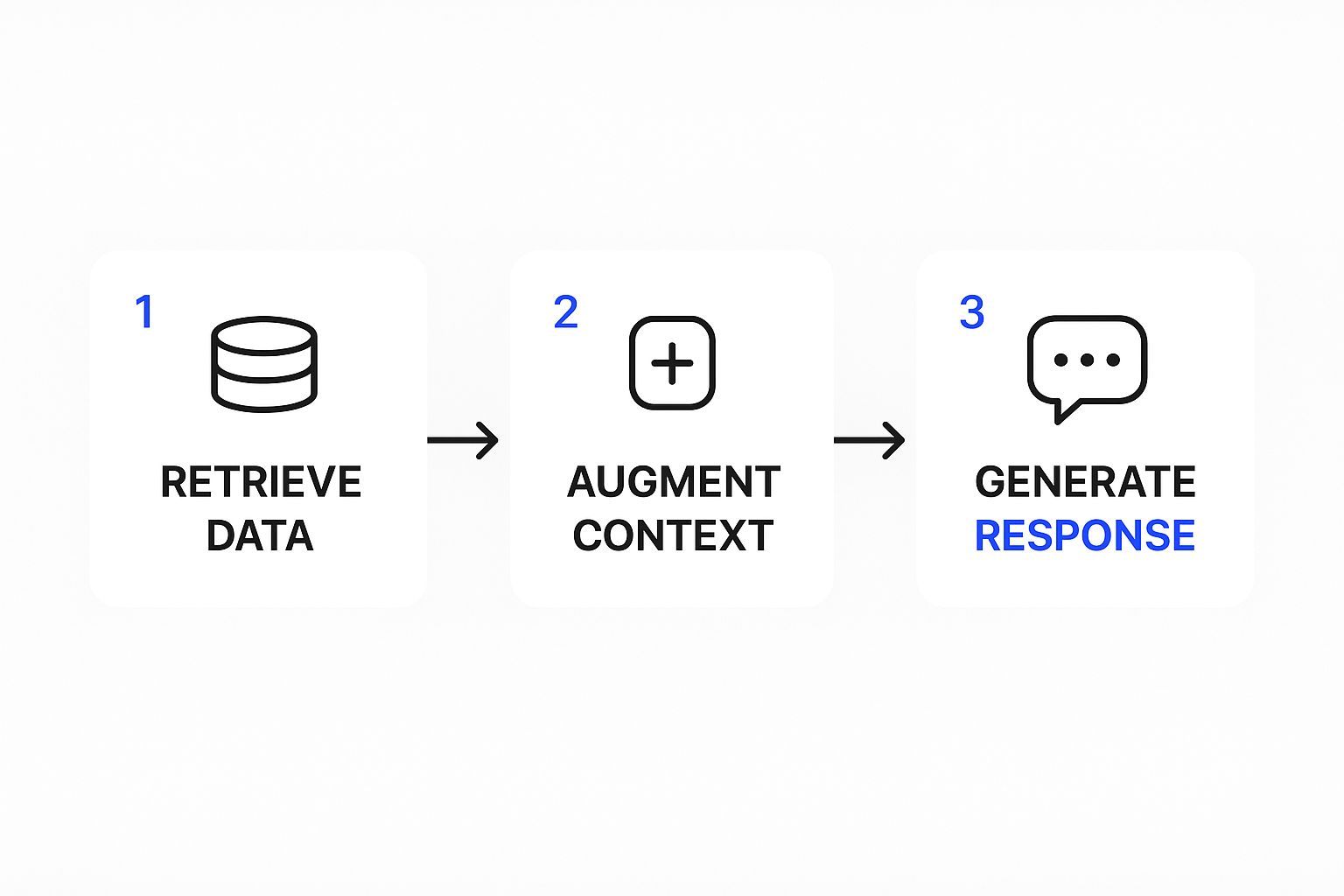

How the RAG Process Functions Step-by-Step

So, how does a RAG system actually work? Let's follow a user's question from start to finish to see it in action. Think of it as a simple, three-part workflow that turns a basic query into a well-researched, detailed response. It’s a smart way to avoid the "closed-book" problem of standard LLMs by actively looking for answers first.

This diagram shows the powerful sequence of events happening inside a RAG system.

As you can see, the system builds a much better, context-aware prompt before the AI even starts to formulate its answer.

The Retrieval Phase

The moment a user submits a question, the process kicks off. Instead of jumping straight to an answer, the system first acts like a research assistant. It takes the user's question and scours a specific knowledge base. This could be anything from a company's internal wiki and product docs to a database of help center articles.

The goal here is simple: find snippets of text that are highly relevant to the question. This is the retrieval phase. It typically relies on search techniques that match on meaning rather than exact wording, so it can pinpoint the most useful information from what could be a massive library of content.
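To make the retrieval phase concrete, here is a minimal sketch in Python. It ranks documents by simple word overlap with the question, which is only a stand-in for the more capable search a production system would use. The knowledge base contents, document ids, and function names are all made up for illustration.

```python
import re

# Hypothetical in-memory knowledge base. A real system would load content
# from a wiki, product docs, or help center articles.
KNOWLEDGE_BASE = [
    {"id": "returns-policy", "text": "Customers can return any product within 30 days of delivery."},
    {"id": "shipping-faq", "text": "Standard shipping takes three to five business days."},
    {"id": "warranty", "text": "Every product includes a one year limited warranty."},
]


def tokenize(text):
    """Lowercase the text and return its distinct word tokens as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents ranked by word overlap with the question."""
    question_words = tokenize(question)
    scored = []
    for doc in documents:
        overlap = len(question_words & tokenize(doc["text"]))
        if overlap > 0:
            scored.append((overlap, doc))
    # Highest overlap first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


results = retrieve("How many days do I have to return a product?", KNOWLEDGE_BASE)
print([doc["id"] for doc in results])
```

Plain word overlap is easily fooled by common words like "to" or "a", which is one reason real systems tend to match on meaning instead.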

The Augmentation and Generation Phases

Once the system has retrieved the best information it can find, the augmentation phase begins. This is where the process gets interesting. The system takes the original user question and combines it with the factual data it just found, creating a new, enriched prompt that gives the Large Language Model (LLM) all the context it needs to succeed.

This augmented prompt is the secret sauce of RAG. It's like handing a speaker a set of detailed notes right before they go on stage, making their talk accurate, specific, and on-point.
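Here is a rough sketch of what building that augmented prompt might look like. The prompt wording, the snippet format, and the function name are assumptions for illustration, not a standard template.

```python
def build_augmented_prompt(question, snippets):
    """Combine retrieved snippets and the user's question into one prompt string."""
    # Number each snippet so the model can refer back to it.
    context_lines = [
        f"[{number}] ({snippet['source']}) {snippet['text']}"
        for number, snippet in enumerate(snippets, start=1)
    ]
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "Cite the bracketed numbers you rely on. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical snippets, as if returned by the retrieval phase.
snippets = [
    {"source": "returns-policy.md", "text": "Customers can return any product within 30 days of delivery."},
    {"source": "shipping-faq.md", "text": "Standard shipping takes three to five business days."},
]
prompt = build_augmented_prompt("How long do I have to return an item?", snippets)
print(prompt)
```

The resulting string is what gets sent to the LLM in place of the bare question.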

Finally, we get to the generation phase. The LLM receives this context-heavy prompt and uses it to construct a precise, fact-based response. Because the answer is grounded in the retrieved documents, it's far more reliable and much less likely to "hallucinate" or make things up. The model can even cite its sources, showing you exactly where it got its facts.

The Big Wins: Why RAG Is a Game-Changer for AI

The move to RAG is about the clear and immediate value it brings to a business. When you ground your AI in verifiable facts, you get systems that are not just smarter, but far more dependable.

The most obvious win is a huge boost in accuracy and a massive drop in AI "hallucinations," those moments when a model just makes things up.

Instead of guessing, a RAG system pulls answers directly from a source you trust. This makes it a perfect fit for any situation that demands precision. Imagine a support bot that can instantly reference a technical manual published this morning to solve a customer's issue. That’s a level of real-time accuracy a standard LLM, with its fixed knowledge cutoff, just can't touch.

Building Trust Through Transparency

One of the biggest hurdles with AI has always been its "black box" problem. You get an answer, but you have no idea where it came from or why you should trust it. RAG flips this on its head by design.

Since the model retrieves information from a specific document or data source, it can actually cite its sources. It can show you the receipts.

This is the foundation of user trust. When a user can click a link and see the original document for themselves, they gain real confidence in the AI's answer. This is important in high-stakes fields where verifying information is a must, not a nice-to-have. For businesses, this kind of transparency is key to delivering quality customer support automation that people actually want to use.

By providing a verifiable data trail, RAG transforms AI from a mysterious oracle into a trustworthy assistant. This accountability is a game-changer for enterprise adoption.
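One simple way to "show the receipts" is to have the model mark its claims with bracketed numbers and then map those numbers back to the documents that were retrieved. The sketch below assumes that marker convention and uses made-up source records. Real systems handle citations in different ways.

```python
import re


def resolve_citations(answer, sources):
    """Map bracketed markers like [1] in an answer to the sources they refer to."""
    cited_numbers = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    return [
        {"marker": number, **sources[number - 1]}
        for number in cited_numbers
        if 1 <= number <= len(sources)  # ignore markers with no matching source
    ]


# Hypothetical sources, listed in the order they were placed in the prompt.
sources = [
    {"title": "Returns policy", "location": "kb/returns-policy.md"},
    {"title": "Shipping FAQ", "location": "kb/shipping-faq.md"},
]
answer = "You can return an item within 30 days of delivery [1]."

citations = resolve_citations(answer, sources)
for citation in citations:
    print(f"[{citation['marker']}] {citation['title']} ({citation['location']})")
```

Markers that do not match a retrieved source are dropped here, which also gives you a cheap signal that the model cited something it was never shown.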

Access to Real-Time, Up-to-the-Minute Information

The real world moves fast, and so does business data. Standard models are basically frozen in time, stuck with whatever information they were trained on months or even years ago.

RAG systems, on the other hand, can connect to live data sources, giving them a massive advantage. The AI's answers are then only as stale as the data source itself, so keeping that source updated keeps the answers current.

Think about what this unlocks:

- Financial Analysis: An AI assistant can pull the latest market data to give you an analysis that reflects what's happening right now, not last quarter.

- Internal Support: An HR bot for employees can access the very latest company policies or IT security guides the moment they're updated.

- E-commerce: A product recommendation engine can check real-time inventory levels to make sure it only suggests items that are actually in stock.

This ability to stay current is what makes AI a truly practical tool for dynamic, real-world work.
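The sketch below illustrates the point with a toy, in-memory knowledge base: the moment a document is replaced, the next search returns the new version, with no retraining involved. The class, its methods, and the policy text are all hypothetical.

```python
import re


class LiveKnowledgeBase:
    """Tiny in-memory document store that can be updated at any time."""

    def __init__(self):
        self._documents = {}

    def upsert(self, doc_id, text):
        """Add a new document or replace the existing one with the same id."""
        self._documents[doc_id] = text

    def search(self, question):
        """Return (id, text) of the document sharing the most words with the question."""
        question_words = set(re.findall(r"[a-z0-9]+", question.lower()))
        best_id, best_score = None, 0
        for doc_id, text in self._documents.items():
            doc_words = set(re.findall(r"[a-z0-9]+", text.lower()))
            score = len(question_words & doc_words)
            if score > best_score:
                best_id, best_score = doc_id, score
        if best_id is None:
            return None
        return best_id, self._documents[best_id]


kb = LiveKnowledgeBase()
kb.upsert("remote-work-policy", "Employees may work remotely two days per week.")
before = kb.search("How many days can employees work remotely?")

# The policy changes, so the document is replaced in the store.
kb.upsert("remote-work-policy", "Employees may work remotely three days per week.")
after = kb.search("How many days can employees work remotely?")

print(before[1])
print(after[1])
```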

All the theory behind Retrieval-Augmented Generation is interesting, but what does it actually do? This is where the technology moves from a cool concept to a real-world problem-solver, creating tools that are not just smart, but genuinely reliable.

Across different industries, companies are starting to use RAG to finally tap into the value locked away in their mountains of documents and data.

Sophisticated Customer Support Chatbots

Customer service is one of the most obvious and powerful use cases. A standard chatbot might get tripped up by a specific question about a new product. At best, it gives a generic reply. At worst, it just makes something up.

A RAG-powered chatbot changes the game. It can access a company's entire knowledge base in real time, pulling from the latest user manuals, technical specs, or help articles to give a precise, up-to-date answer. This instantly elevates a basic bot into a highly effective first line of support. To see how this works for larger companies, check out our guide on enterprise chatbot solutions.

By connecting an LLM to a constantly updated knowledge base, businesses can provide customers with instant, precise answers drawn from authoritative company sources.

This simple shift solves the nagging problem of outdated or incorrect bot responses, leading to happier customers and taking a significant load off human support agents.

Advanced Research in Specialized Fields

Fields like law and medicine are drowning in data. Professionals depend on enormous, constantly growing libraries of documents, and finding one specific legal precedent or a single relevant medical study can take hours of painstaking manual work.

RAG-powered AI assistants are turning this on its head. These tools can sift through huge digital libraries of case law, research papers, and clinical trial data in seconds.

Here’s how it helps:

- A legal assistant can find and summarize relevant case law for a new client’s situation almost instantly.

- A medical researcher can ask the AI to find every study linking a specific gene to a particular condition and get a list back in moments.

This capability does not just speed up research; it gives professionals the ability to make better-informed decisions, much faster.

Internal Knowledge Management

Big companies often have an internal information problem. Important knowledge is scattered across different departments and formats, buried in PDFs, old presentations, or forgotten wikis. Employees waste valuable time just trying to find the right company policy or technical guide.

A RAG system acts like a single, intelligent search tool for all of that internal knowledge. An employee can just ask a question in plain English, and the system finds the answer, wherever it's hidden. It’s like giving everyone on your team a personal librarian who has read every document the company has ever produced.

The Growing Business Impact of RAG

The rise of Retrieval-Augmented Generation is doing more than just creating ripples in the tech community; it’s making serious economic waves. This is a foundational shift in how companies can tap into their own institutional knowledge. The reason for the hype is simple: businesses need AI that is reliable, accurate, and grounded in fact.

And the investment dollars are following. Market projections show just how big this shift is. By one estimate, the RAG market is currently valued at around USD 1.24 billion and is on track to hit USD 67.4 billion by 2034. That’s a compound annual growth rate of nearly 49%. To put that in perspective, the U.S. market alone is expected to reach about USD 17.8 billion.
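If you want to sanity-check the growth rate yourself, the arithmetic is short. The sketch below assumes a 10-year horizon, which the figures above do not state explicitly. With that assumption, the result comes out at roughly 49%, in line with the quoted rate.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Return the constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


start_usd_billion = 1.24  # current market value quoted in the text
end_usd_billion = 67.4    # projected 2034 value quoted in the text
years = 10                # ASSUMPTION: the text does not give the base year

cagr = compound_annual_growth_rate(start_usd_billion, end_usd_billion, years)
print(f"Implied CAGR: {cagr:.1%}")
```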

Turning Data into a Competitive Edge

So, what’s fueling this explosive growth? A problem nearly every company faces: massive, untapped piles of unstructured data. Think about all the valuable information locked away in documents, reports, internal wikis, and chat logs. RAG is the key that unlocks it all, turning a static, dusty archive into a dynamic asset you can actually use.

By putting RAG to work, businesses are building a real competitive advantage. They're creating smarter, fact-based AI tools that lead to better decisions and massively improved customer interactions.

An AI assistant powered by RAG does not just pull answers from a generic knowledge base. It consults your company’s specific, up-to-date information to deliver precise, actionable intelligence. This makes it a strategic investment, not just another piece of tech.

A Strategic Investment for Modern Business

Thinking of RAG as a strategic move helps clarify its true value. It’s about making your entire organization smarter and more responsive by giving both your teams and your customers instant access to the right information, right when they need it.

Just imagine these practical outcomes:

- Sales teams can get instant, accurate product details to answer tricky questions and close deals faster.

- Operations departments can find information on internal processes in seconds instead of hours.

- Customer support agents can resolve issues with confidence, backed by the most current data.

This unique ability to connect AI to proprietary, real-time information is what makes RAG an important tool for any modern business that wants to operate more effectively. It’s no longer a "nice-to-have"; it's becoming a "need-to-have."

Got Questions About RAG?

As you start to get familiar with Retrieval-Augmented Generation, a few common questions always seem to pop up. It’s a new way of thinking about how AI can access and use information, so it’s worth clearing up a few things before you investigate further.

Here are answers to some of the most frequent queries we hear.

Is RAG the Same as Fine-Tuning?

Nope, they're two very different tools for two different jobs.

Fine-tuning is a lot like training a specialist. You take a general-purpose Large Language Model and retrain it on a specific, niche dataset. This process actually changes the model's internal wiring, teaching it a new skill, a unique style, or deep expertise in a narrow field.

RAG, on the other hand, doesn’t change the model at all. Think of it as giving the model an open-book test. It provides the LLM with an external knowledge library it can consult in real time to find facts and answer questions. While you can use both methods together, RAG is often much faster and more cost-effective for keeping an AI's knowledge fresh and factually accurate.

What Kind of Data Can I Use With a RAG System?

One of the best things about RAG is its flexibility. It works incredibly well with the kinds of unstructured and semi-structured data most businesses already have sitting around in digital filing cabinets.

This makes it perfect for unlocking the value trapped in your existing documents. Common sources include:

- Internal Documents: Things like PDFs, Word docs, and company policy manuals.

- Website Content: Your help center articles, blog posts, or product pages.

- Operational Data: Transcripts from customer support calls or detailed product specs.

The key step is converting all this varied information into a consistent numerical format called embeddings: vectors that capture the meaning of each piece of text. Stored in a searchable index, these vectors let the retrieval system quickly pinpoint the passages most relevant to a question.
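To give a feel for how this works, here is a toy sketch. It turns each document into a word-count vector, stores the vectors in an index, and finds the closest match to a question using cosine similarity. A real system would use a learned embedding model instead of word counts, and the document ids and text here are invented for illustration.

```python
import math
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocabulary(texts):
    """Assign each distinct word in the corpus its own vector position."""
    words = sorted({word for text in texts for word in tokenize(text)})
    return {word: position for position, word in enumerate(words)}


def embed(text, vocabulary):
    """Toy embedding: a unit-length word-count vector (stand-in for a learned model)."""
    vector = [0.0] * len(vocabulary)
    for word in tokenize(text):
        if word in vocabulary:
            vector[vocabulary[word]] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


def cosine_similarity(a, b):
    """For unit-length vectors, the dot product is the cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical mix of sources: a PDF, a help article, a call transcript.
documents = {
    "policy.pdf": "Employees accrue fifteen vacation days per year.",
    "help-center/reset": "Reset your password from the account settings page.",
    "call-transcript-17": "The customer asked about battery life on the new model.",
}

vocabulary = build_vocabulary(documents.values())
# The index: one precomputed vector per document.
index = {doc_id: embed(text, vocabulary) for doc_id, text in documents.items()}


def search(question, top_k=1):
    """Return the ids of the top_k documents closest to the question."""
    query = embed(question, vocabulary)
    ranked = sorted(index, key=lambda doc_id: cosine_similarity(query, index[doc_id]), reverse=True)
    return ranked[:top_k]


print(search("How do I reset my password?"))
```

Because every document is embedded once up front, answering a question only requires embedding the question and comparing it against the stored vectors.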

What’s Next for RAG Technology?

The future of RAG is all about making the retrieval process smarter and more dynamic. We're moving beyond simple keyword lookups and into what some are calling "agentic RAG." This is where AI agents can perform much more complex reasoning on their own.

Instead of just pulling up a single document, future systems will be able to tackle complex, multi-part questions by synthesizing information from several different sources to build one comprehensive answer.
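As a very rough illustration of the multi-part idea, the sketch below splits a question into sub-questions and retrieves a separate document for each one. The splitting rule (breaking on the word "and") is a deliberately naive stand-in for the planning an AI agent would do, and the documents and function names are hypothetical.

```python
import re

# Hypothetical knowledge base with one short document per topic.
DOCUMENTS = {
    "pricing": "The Pro plan costs 49 dollars per month.",
    "limits": "The Pro plan allows up to ten team members.",
    "security": "All customer data is encrypted at rest.",
}


def words(text):
    """Return the distinct lowercase word tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_into_sub_questions(question):
    """Naive stand-in for a planning step: split the question on the word 'and'."""
    parts = re.split(r"\band\b", question.rstrip("?"))
    return [part.strip() for part in parts if part.strip()]


def best_document(sub_question):
    """Return the id of the document sharing the most words with the sub-question."""
    return max(DOCUMENTS, key=lambda doc_id: len(words(sub_question) & words(DOCUMENTS[doc_id])))


def gather_context(question):
    """Retrieve one document per sub-question, keeping order and dropping duplicates."""
    selected = []
    for sub_question in split_into_sub_questions(question):
        doc_id = best_document(sub_question)
        if doc_id not in selected:
            selected.append(doc_id)
    return selected


context_ids = gather_context("What does the Pro plan cost per month and is customer data encrypted?")
print(context_ids)
```

The selected documents would then be combined into a single prompt so the model can write one answer that covers both parts of the question.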

We're also seeing a rise in hybrid approaches that combine RAG with other techniques, like fine-tuning, to build even more powerful and adaptable AI systems. As organizations keep generating more data, RAG is set to become a required tool for making that information not just accessible, but truly useful.

Ready to build an AI chatbot trained on your own data? Chatiant makes it easy. Create a custom AI Agent that can look up customer information, book meetings, or serve as a helpdesk assistant directly within your existing workflows. Start your free trial today.