What Is RAG in AI and How It Improves AI Models

Discover what is RAG in AI and how Retrieval-Augmented Generation gives language models real-time knowledge for more accurate and trustworthy answers.

So, what exactly is Retrieval-Augmented Generation (RAG)?

Think of it as giving a super-smart AI a library card. Instead of just relying on the books it has already read (its training data), it can now go out, find the latest information, and use those fresh facts to give you a much better answer.

Unlocking Smarter AI with External Knowledge

A standard Large Language Model (LLM) works from a fixed, internal memory. It only knows what it learned during its initial training. The problem? That knowledge gets stale, fast. This can lead to the AI confidently giving you answers that sound right but are completely wrong, a phenomenon known as "hallucination."

This is precisely where RAG changes the game.

First dreamed up by researchers back in 2020, RAG was designed to solve this very problem. It gives a language model the ability to dynamically pull in relevant, up-to-the-minute information from outside sources like your company's internal documents or a live database before it even starts to craft a response.

This simple, two-step process of retrieving first, then generating, grounds the AI's answer in real, verifiable data. This brings some huge advantages:

- Dramatically Better Accuracy: Answers are built on current, specific data, not just old training information.

- Fewer Hallucinations: By grounding its responses in actual documents, the AI is far less likely to just make things up.

- More Trustworthy: Users can often see the sources the AI used, adding a much-needed layer of transparency to its answers.

RAG essentially turns a generalist AI into an on-demand specialist. It stops guessing and starts looking things up, making sure every answer is timely and contextually spot-on.

To get a clearer picture, let's quickly compare how a standard LLM operates versus one that's been improved with RAG.

Standard LLM vs RAG-Enabled LLM

Ultimately, by bridging the gap between an LLM's static knowledge and live data, RAG makes AI tools far more powerful and reliable for real-world business.

If you're ready to go deeper, check out our complete guide on what RAG is and how you can start building with it.

How the RAG Process Actually Works

When you ask an AI a question using a RAG-powered system, it does not just pull an answer out of thin air. Instead, it kicks off a structured, two-phase process behind the scenes to make sure the response is grounded in actual facts.

The Retrieval Phase

First up is the retrieval phase. The moment you hit "send" on your query, the system’s first job is to search a specific knowledge base. This could be anything from a library of internal company documents to a technical database or any curated set of information.

The system then hunts down the most relevant snippets of text from that source. Think of it like a lightning-fast research assistant who knows exactly which paragraphs in a massive library hold the answer to your question.

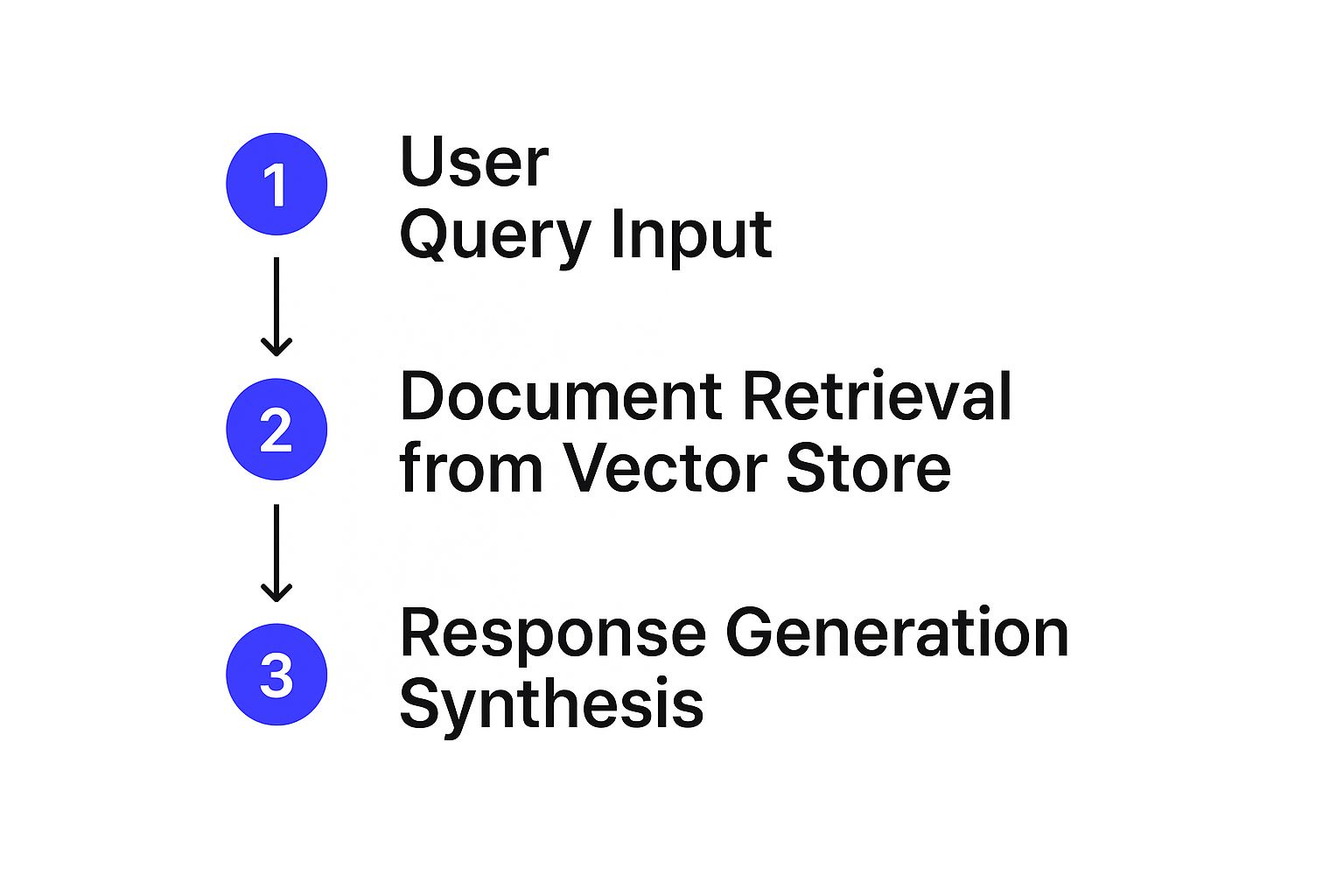

This visual shows that simple, powerful flow from your query to a grounded, AI-generated response.

As the infographic shows, the system fetches relevant data before ever involving the language model. That simple step is the key to getting more accurate answers.

The Generation Phase

Once the system has gathered the most useful bits of information, the generation phase begins. It takes the retrieved context, bundles it with your original question, and sends the whole package over to a Large Language Model (LLM).

Armed with this rich, factual context, the AI now has everything it needs to put together a precise and detailed answer. It crafts a response that’s directly informed by the data it just received, not just its general training.

The core idea is simple: the AI is given an "open book" for its exam. By providing specific, relevant information at the moment of the query, RAG makes sure the final answer is not just plausible but verifiably correct.

This whole approach makes the system more transparent and reliable. You can see a full breakdown of how RAG works to see how each piece contributes to a smarter AI. The result is an AI that can confidently answer specific questions with information that is both current and correct.

The Key Components of a RAG System

A RAG system is a collection of specialists working together to give you smart, fact-based answers. At its core, you have a Large Language Model (LLM), the brain of the operation. This is the sophisticated engine that actually crafts the final, human-like response.

But an LLM on its own is a bit like a brilliant student who has not done the required reading. It needs source material. That’s where the Knowledge Base comes in. This is just a term for whatever documents or data you want the AI to learn from: product manuals, legal contracts, your company’s internal wiki, you name it.

To make all that data useful, the system first has to prepare it for lightning-fast searching. This important step is called indexing.

Indexing and Vector Embeddings

During indexing, the system takes all the raw information from your knowledge base and organizes it for quick and accurate retrieval. It's a two-step dance:

Chunking: First, long documents are broken down into smaller, more manageable pieces, or "chunks." This helps the AI pinpoint the exact piece of information it needs instead of getting lost in a giant wall of text.

Embedding: Next, each of these chunks is converted into a special numerical format called a vector embedding. These numbers capture the semantic meaning of the text, allowing the system to understand concepts and context, not just keywords.

Think of it like creating a super-detailed conceptual map of a library. Instead of just knowing which book a word is in, the map shows you where similar ideas are located, even if they’re described with completely different words.

These vector embeddings are the secret sauce. They let the AI search for meaning, not just matching text. That’s what makes the retrieval so much smarter and more relevant.

All these vector embeddings are then stored in a specialized Vector Database. This is not your average database; it’s built specifically to perform incredibly fast similarity searches across millions of these numerical representations. When you ask a question, the RAG system instantly finds the chunks of text whose embeddings are most similar to your query, giving the LLM the perfect context to craft its answer.

Why RAG Is a Game-Changer for AI

So, what makes a Retrieval-Augmented Generation system such a big deal? For any business using AI, the benefits are immediate and powerful. The biggest win is a massive jump in accuracy. Because RAG grounds its answers in specific, verifiable documents, it nearly eliminates the risk of AI "hallucinations," those moments when a model confidently makes things up.

Another huge advantage is its ability to access information in real time. A standard LLM’s knowledge is basically frozen at the moment it was trained. A RAG system, on the other hand, can be connected to a knowledge base that’s constantly updated. If your company rolls out new product specs or changes an internal policy, the AI can use that information instantly, no retraining required.

Building Trust and Saving Costs

RAG also happens to be a remarkably cost-effective way to keep an AI up to date. Fully retraining a massive language model is an incredibly expensive and slow process. In contrast, just updating a folder of documents in a knowledge base is far cheaper and faster, letting businesses stay nimble.

This entire approach builds a foundation of trust and transparency. Since RAG can literally cite its sources, users can easily click through to the original documents to double-check the AI’s answers. This level of accountability is a major reason why its adoption is accelerating.

By linking generated answers directly back to source documents, RAG gives businesses and users the ability to verify AI outputs, which improves trust and helps with regulatory compliance.

The demand for this kind of verifiable AI is growing fast. Industry forecasts predict a compound annual growth rate of roughly 35% in the adoption of retrieval-augmented AI technologies between 2022 and 2027. This surge is almost entirely driven by the need for greater transparency and accountability in business. You can find more on this trend by exploring industry analysis on what RAG is in AI.

Ultimately, by making AI more accurate, current, and transparent, RAG transforms a generalist model into a reliable, specialist tool you can actually count on.

Real-World Examples of RAG in Action

So, where does Retrieval-Augmented Generation actually make a difference? Let's move past the theory and look at how it's being used in the real world. The real magic happens when RAG connects a powerful, general AI to a specific, private knowledge base, effectively turning it into a specialized expert on demand.

These examples show just how practical RAG can be. By grounding every response in real, verifiable data, the AI becomes a trustworthy tool for tackling complex tasks that used to require a ton of manual effort.

Smarter Customer Support

Think about the last time you dealt with a chatbot. In customer support, a RAG-powered chatbot can access a company's entire library of help docs, product manuals, and troubleshooting guides. This lets it give customers specific, up-to-date answers instead of generic replies.

Customers get detailed instructions pulled straight from the official knowledge base, which means the bot can handle far more complex questions. This frees up human agents to focus on the truly tricky issues that need a personal touch.

Advanced Professional Research

For professionals in fields like law or medicine, a RAG research assistant is a game-changer. It can sift through massive databases of case law or clinical studies in seconds, pulling out relevant precedents and findings, a job that would take a human researcher hours, if not days.

This is where a generalist AI transforms into a specialist with deep, domain-specific knowledge. It delivers immediate, accurate information that professionals can actually trust for important decisions.

This does not just speed up the research process; it helps uncover connections that might have been missed. The AI can summarize its findings and provide direct links back to the source material, making verification a breeze.

Efficient Enterprise Search

Inside a large company, finding information can be a nightmare. A search tool built with RAG can fix that. It lets employees ask natural-language questions and get straight answers sourced from internal wikis, project reports, and shared drives.

This solves the all-too-common problem of important information being buried across dozens of different systems. Employees no longer need to hunt through endless folders. They can just ask, "What was our Q3 revenue last year?" and get a direct, sourced answer right away.

Common Questions About RAG

As you look into what RAG is in AI, a few questions tend to pop up again and again. Getting a handle on these will give you a much clearer picture of where it fits and how it stacks up against other ways of customizing language models.

How Is RAG Different From Fine-Tuning an AI Model?

It’s easy to confuse RAG and fine-tuning, but they solve very different problems.

Fine-tuning actually changes a model’s internal wiring by retraining it on a new, specialized dataset. Think of it like teaching a talented writer a completely new genre or style; you're fundamentally altering how they approach their craft.

RAG, on the other hand, leaves the core model untouched. Instead, it gives the model an external "library" to consult for answers in real time. It's like giving that same writer access to a massive, up-to-date encyclopedia to reference as they write.

- Choose RAG when: You need the AI to pull from information that changes frequently or when you absolutely have to cite the sources for its answers.

- Choose fine-tuning when: You want to change the model's personality, tone, or inherent behavior to better match a specific task, like writing in your company's brand voice.

What Kind of Data Can Be Used in a RAG Knowledge Base?

One of the best things about a RAG system is its flexibility with data. Most of the time, organizations feed it unstructured text from things like PDFs, Word documents, and content scraped from websites.

But it’s not limited to that. RAG can also pull information from structured sources like databases, spreadsheets, or even through APIs. The magic happens when all this data is processed into vector embeddings, which lets the retrieval system search for relevant information no matter what format it started in. This makes RAG a great fit for pretty much any field with a body of knowledge to draw from.

The core strength of RAG is its ability to make almost any collection of information searchable and useful for an LLM, transforming static documents into a dynamic knowledge source.

What Are the Main Challenges of Implementing RAG?

While RAG is powerful, building a solid system does come with a few hurdles. One of the biggest challenges is simply keeping the knowledge base in good shape. A RAG system's output is only as good as the data it retrieves. If your source documents are out of date or just plain wrong, the AI's answers will be too.

Another tricky part is dialing in the retrieval process to make sure it consistently finds the most relevant information for a user's question. Finally, getting the retrieved context to blend seamlessly with the user's original prompt for a smooth, coherent response takes some careful engineering.

You can learn more by exploring other common questions about RAG and their solutions.

Ready to build a smarter, more accurate AI assistant for your business? Chatiant lets you create custom chatbots trained on your own data. Start your free trial today