Natural Language Processing Basics: A Beginner's Guide

Learn the essentials of natural language processing (NLP). Discover how computers understand human language through simple explanations and real-world examples.

Ever wonder how your phone’s voice assistant actually gets what you’re saying? Or how a spam filter knows exactly which emails to toss into the junk folder? That’s Natural Language Processing (NLP) at work.

Basically, NLP is a field of artificial intelligence that gives computers the ability to read, interpret, and make sense of our messy, everyday human language. This guide will break down the basics of how it all works and show you where it’s already a part of your daily life.

How Computers Actually Understand Human Language

Let’s be honest: human language is complicated. It’s filled with nuance, slang, and context that a machine, which thinks in ones and zeros, just doesn’t naturally get. NLP is the bridge that closes that communication gap. It gives us the tools and methods to translate our unstructured language into a neat, structured format that a computer can finally analyze.

Think of it like teaching a computer a foreign language. You can’t just hand it a dictionary and expect fluency. You have to teach it grammar, sentence structure, idioms, and the subtle meanings behind words.

Take a word like "cool." It could refer to the temperature, or it could be a term of approval. NLP helps a machine figure out the right meaning based on the words surrounding it.

This process is what allows technology to handle all sorts of tasks that used to require a human. You probably use a few of them every single day:

- Spam Filters: They scan your email content to spot and block junk mail before it even hits your inbox.

- Virtual Assistants: This is what powers devices like Siri and Alexa, letting them process your voice commands and dig up relevant answers.

- Machine Translation: It’s how tools like Google Translate can translate text from one language to another almost instantly.

NLP is what lets a computer treat language not just as raw data, but as meaning shaped by context, the way we do naturally. It's the engine running behind the scenes, making modern tech feel more intuitive and genuinely helpful.

In this article, we’ll walk through the foundational concepts of NLP, from its early days to the specific techniques that make it all possible. You’ll see how machines learn to process text, from breaking sentences down into individual words to figuring out the relationships between them. It’s a fascinating field that’s constantly changing how we interact with the digital world.

The Evolution from Simple Rules to Learning Machines

To really get a handle on natural language processing, it helps to rewind the clock. This field didn't just appear overnight. Its journey started way back in the 1950s with a very manual, rule-based approach. Early programmers literally sat down and tried to teach computers the complex grammar of human language, one rule at a time. Imagine trying to write a script for every single exception in English. It was painstaking work.

This approach had its moments, though. Early chatbots like ELIZA, built in the mid-1960s, could mimic conversation by spotting keywords and reflecting them back. While it was a huge deal for its time, ELIZA didn't actually understand a single word. It was a clever illusion that highlighted a major flaw: human language is just too messy and fluid to be boxed in by a fixed set of instructions.
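To show how thin that illusion was, here is a minimal Python sketch of keyword spotting and reflection. The two rules and the pronoun-swap table are invented for illustration and are far simpler than ELIZA's real script; the point is that the program only matches patterns and echoes text back, with no grasp of what the words mean.

```python
import re

# Hypothetical keyword rules in the spirit of ELIZA: a pattern to spot
# and a template that reflects the captured text back to the user.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones before echoing them back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}


def reflect(fragment: str) -> str:
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(w, w) for w in words)


def respond(message: str) -> str:
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    # No keyword matched: fall back to a generic prompt.
    return "Please go on."


print(respond("I feel tired of my job."))   # Why do you feel tired of your job?
print(respond("My brother never calls."))   # Tell me more about your brother.
print(respond("The weather is nice."))      # Please go on.
```

Anything that doesn't match a rule gets a canned reply, which is exactly where the illusion breaks down.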

The Shift to Statistical Learning

By the 1980s and 90s, researchers started thinking differently. Instead of hand-crafting rules, they began feeding computers massive amounts of text and letting them figure out the patterns on their own. This marked the shift to statistical methods and the first real steps into machine learning.

The big breakthrough was realizing a machine could learn that "queen" often shows up near "king" without ever being told about royalty. This data-driven method was far more flexible and could scale in ways the old rule-based systems never could.
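The counting behind that insight can be sketched in a few lines of Python. This assumes a made-up three-sentence corpus and a window of two words on either side of the target; real statistical systems worked with far larger corpora and more careful weighting.

```python
from collections import Counter

# A tiny, made-up corpus; real systems count over millions of sentences.
corpus = [
    "the king and queen ruled the land",
    "the queen and king spoke",
    "the farmer planted corn in the field",
]


def cooccurrences(sentences, target, window=2):
    """Count words appearing within `window` positions of `target`."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.split()
        for i, token in enumerate(tokens):
            if token != target:
                continue
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[tokens[j]] += 1
    return counts


counts = cooccurrences(corpus, "king")
print(counts["queen"], counts["corn"])  # 2 0
```

On this toy data, "queen" shows up near "king" while "corn" never does. Notice that a filler word like "and" scores just as high, which is why real systems filter or down-weight very common words.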

This leap from manually coded rules to data-driven learning was the single biggest turning point in NLP history. It laid the groundwork for the powerful, context-aware systems we have today.

The Rise of Modern AI Language Models

That statistical foundation set the stage for modern AI language models, which learn from vastly larger datasets. The core idea is the same (learn from examples), but the scale and sophistication have exploded.

Historically, NLP's roots trace back to the 1950s, intertwined with artificial intelligence and linguistics. A key moment was Alan Turing's 1950 paper, "Computing Machinery and Intelligence," where he proposed what he called the "imitation game," now known as the Turing Test, to judge whether a machine could exhibit human-like intelligence.

This evolution from rigid rules to flexible, learning systems is what makes today's NLP so powerful. Instead of being stuck with what humans can explicitly program, machines can now get smarter and more accurate with every piece of language data they process.

Breaking Down Language for Machines

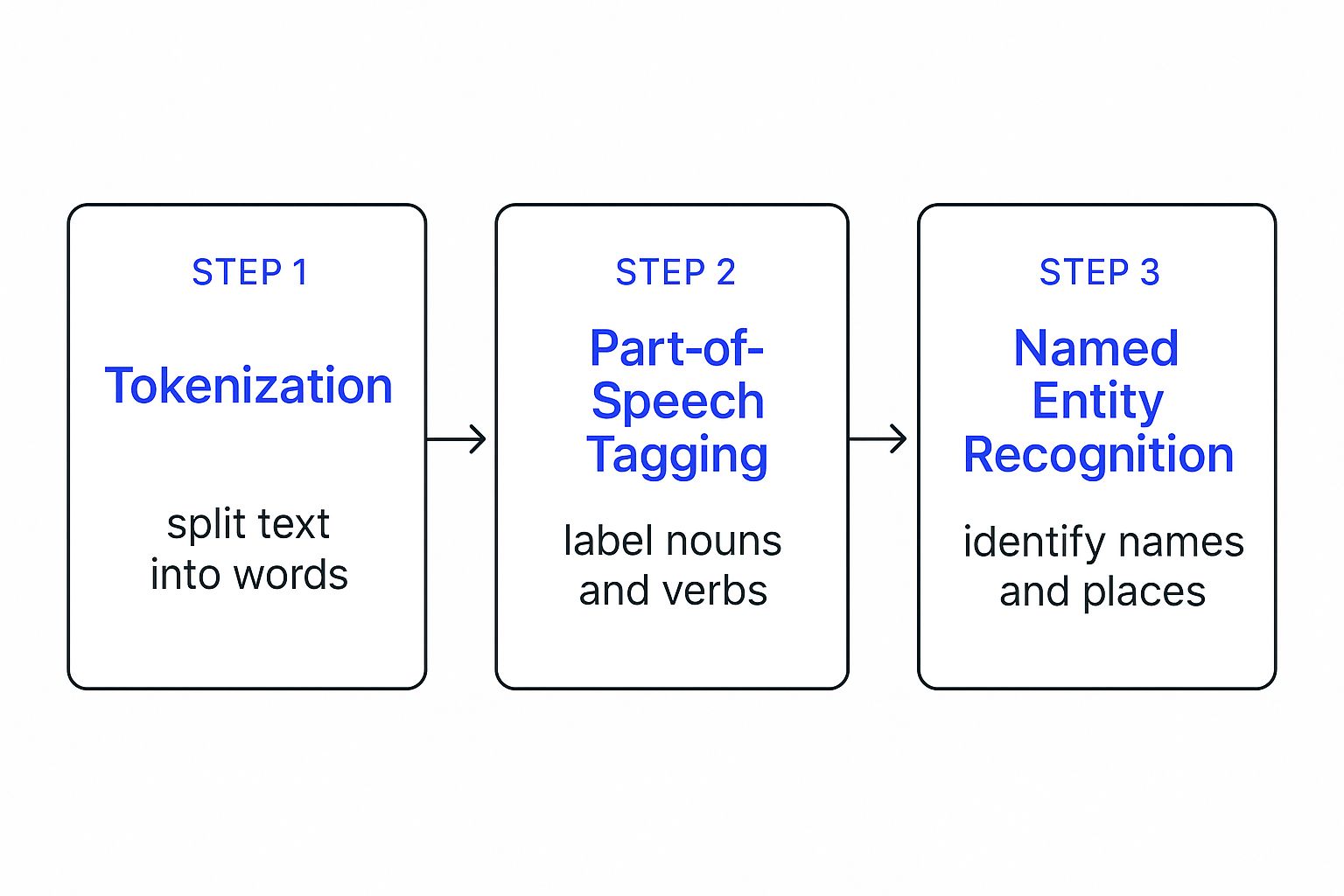

So, how does a computer actually read a sentence? It doesn't see words and meaning the way we do. Instead, it follows a strict, step-by-step process, almost like a detective piecing together clues to solve a case. This sequence, often called an NLP pipeline, turns messy, everyday human language into structured data a machine can work with.

This whole process starts with raw text and ends with a meaningful, organized output. To get there, the computer performs a series of specific tasks, with each one building on the last to create a complete picture of the language's meaning. These are the fundamental building blocks of how NLP works.

The infographic above shows the very first, most critical steps in this pipeline, illustrating how a machine starts to deconstruct a simple sentence.

As you can see, the journey from raw text to machine understanding is a logical progression, starting with tiny units and moving to more complex concepts.

Key NLP Tasks in Action

Let's zoom in on the most common tasks that make up this pipeline. Each one has a distinct job, helping the computer make sense of words, grammar, and context. Without these steps, a machine would only see a jumbled string of characters with no real significance.

For example, the very first step is always to break the text down. Imagine trying to make sense of a long paragraph without knowing where one word ends and the next begins. That’s pretty much what a computer sees before it starts processing.
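That first step is called tokenization. A minimal Python sketch shows why it is trickier than splitting on spaces. The regular expression here is an illustrative assumption, not a standard; real tokenizers handle many more edge cases, such as URLs, hyphenated words, and languages that don't put spaces between words.

```python
import re

text = "Don't panic! NLP isn't magic, it's math."

# Naive approach: split on whitespace. Punctuation stays glued to words.
naive = text.split()

# Slightly better: keep words (with an internal apostrophe) together and
# treat each punctuation mark as its own token.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def tokenize(s: str) -> list[str]:
    return TOKEN_PATTERN.findall(s)


print(naive)
# ["Don't", 'panic!', 'NLP', "isn't", 'magic,', "it's", 'math.']
print(tokenize(text))
# ["Don't", 'panic', '!', 'NLP', "isn't", 'magic', ',', "it's", 'math', '.']
```

The naive version leaves "panic!" and "magic," as single tokens, so the computer would treat "magic," and "magic" as different words.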

The core idea behind the NLP pipeline is simple: transform unstructured, chaotic human language into a structured format that algorithms can actually work with. It's a method of creating order from linguistic chaos.

The table below breaks down these foundational tasks. It shows what each step does, why it’s so important, and gives a simple example to make the process crystal clear. Think of it as a roadmap for how a machine learns to read.

Key Tasks in a Typical NLP Workflow

This table outlines the fundamental tasks involved in processing human language, explaining what each step accomplishes.

Each stage provides a new layer of meaning, getting the system one step closer to a human-like interpretation of the text. This systematic approach is central to how all modern NLP systems function.
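The idea of stages that each build on the last can be sketched as a chain of small Python functions. This is a toy pipeline under stated assumptions: the stop-word list is a tiny hypothetical one, and the final "structured" output is just a word-frequency dictionary (a bag of words), not a full grammatical analysis.

```python
import re
from collections import Counter

# A tiny, hypothetical stop-word list; real lists contain hundreds of words.
STOP_WORDS = {"the", "a", "an", "is", "to", "of", "and", "on"}


def normalize(text: str) -> str:
    # Stage 1: lowercase so "Cat" and "cat" are treated as the same word.
    return text.lower()


def tokenize(text: str) -> list[str]:
    # Stage 2: split into word tokens, dropping punctuation.
    return re.findall(r"[a-z0-9']+", text)


def remove_stop_words(tokens: list[str]) -> list[str]:
    # Stage 3: drop very common words that carry little meaning on their own.
    return [t for t in tokens if t not in STOP_WORDS]


def to_bag_of_words(tokens: list[str]) -> dict[str, int]:
    # Stage 4: structured output, a mapping from word to count.
    return dict(Counter(tokens))


def pipeline(text: str) -> dict[str, int]:
    return to_bag_of_words(remove_stop_words(tokenize(normalize(text))))


print(pipeline("The cat sat on the mat. The cat is happy!"))
# {'cat': 2, 'sat': 1, 'mat': 1, 'happy': 1}
```

Because each stage only consumes the previous stage's output, you can swap one step (say, a smarter tokenizer) without touching the others.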

How Modern NLP Understands Context

While the early statistical methods were a huge improvement over hand-written rules, they had a big weakness. They needed massive amounts of carefully labeled data to work well. The real breakthrough in NLP came when the field shifted to deep learning and neural networks, which taught computers to understand language in a much more intuitive, context-aware way.

One of the first major steps in this direction was the idea of word embeddings. This is a technique that turns words into numerical vectors, basically giving each word its own set of coordinates.

You can think of it like placing every single word on a giant, multi-dimensional map. On this map, words with similar meanings are clustered together. "King" would be located near "queen," and "walking" would be a close neighbor to "running." This approach, seen in models like Word2Vec, was a game-changer because it allowed machines to learn the relationships between words on their own.
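Here is a toy illustration of the map idea in Python, using cosine similarity (a standard way to measure how closely two vectors point in the same direction). The three-dimensional vectors are hand-picked assumptions; models like Word2Vec learn vectors with hundreds of dimensions from data.

```python
import math

# Hand-made 3-dimensional "embeddings", purely for illustration.
# Real models learn these coordinates from large amounts of text.
EMBEDDINGS = {
    "king":    [0.9, 0.8, 0.1],
    "queen":   [0.9, 0.7, 0.2],
    "walking": [0.1, 0.2, 0.9],
    "running": [0.2, 0.1, 0.8],
}


def cosine_similarity(u, v):
    """1.0 means same direction; values near 0 mean unrelated."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest(word):
    """Return the other word whose vector is most similar to `word`'s."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


print(nearest("king"))     # queen
print(nearest("walking"))  # running
```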

The Rise of Transformers and LLMs

The next massive leap forward came with a new type of model architecture. Starting in the 2010s, deep learning models and huge datasets pushed NLP forward, but the single most important development was the Transformer architecture in 2017. This new design was brilliant because it could process text in parallel and was far better at capturing long-range connections between words in a sentence. For a closer look, check out this paper on the history and impact of large language models.

This architecture paved the way for Large Language Models (LLMs) like OpenAI's GPT series and Google's BERT. These models are pre-trained on staggering volumes of text from the internet, books, and articles, giving them an incredibly broad knowledge of grammar, facts, and even reasoning.

The secret sauce of the Transformer is its attention mechanism. This allows the model to weigh the importance of different words in a sentence when it’s trying to make sense of a specific word. It’s what gives it an incredible ability to track context.

For example, take the sentence, "The delivery truck blocked the bank of the river." The attention mechanism helps the model focus on the word "river" to correctly interpret "bank," effectively ignoring the financial meaning that would be relevant in a different context.
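A minimal sketch of the core calculation, scaled dot-product attention for a single query, in Python. Assumptions: the three-dimensional vectors are invented, and a real Transformer derives its queries, keys, and values from learned projections, across many attention heads at once. The point is only that the word whose vector lines up best with the query for "bank" (here "river") receives the largest weight.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


words = ["delivery", "truck", "blocked", "river"]
# Toy vectors for some of the other words in the sentence (hypothetical).
vectors = {
    "delivery": [0.1, 0.9, 0.0],
    "truck":    [0.2, 0.8, 0.1],
    "blocked":  [0.3, 0.3, 0.3],
    "river":    [0.9, 0.1, 0.8],
}
bank_query = [1.0, 0.0, 1.0]  # hypothetical query vector for "bank"
keys = [vectors[w] for w in words]
weights, output = attention(bank_query, keys, keys)

for word, weight in zip(words, weights):
    print(f"{word:>8}: {weight:.2f}")
```

The weights always sum to 1, so attention is a budget: giving more weight to "river" necessarily means giving less to "delivery" and "truck".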

What Makes These New Models Better

So, what really sets these modern models apart from the older systems? It all comes down to a few key advantages that directly address the weaknesses of previous approaches.

- Contextual Understanding: Unlike older word embeddings where "bank" always had the same vector, models like BERT create context-dependent embeddings. The vector for "bank" actually changes depending on whether the sentence is about money or a river.

- Reduced Need for Labeled Data: Because LLMs are pre-trained on so much general text, you can fine-tune them for specific tasks with far less specialized, labeled data than was needed before.

- Advanced Capabilities: These models can do a lot more than just classify text. They can write coherent paragraphs, answer complicated questions, and even perform logical reasoning. To see how these models can be supercharged with external knowledge, check out our guide on Retrieval-Augmented Generation (RAG).

This fundamental shift to deep learning and transformer-based models is the reason today’s NLP tools feel so much more capable and human-like.

It might feel like a complex, behind-the-scenes concept, but you’re actually using natural language processing all the time. This technology is the quiet engine running inside the tools you rely on every day, making them feel smarter and more intuitive.

The technology connects your words to a machine’s actions. It’s the magic that lets your smart speaker play a song or keeps your inbox (mostly) clean. Let's look at a few places you’re already seeing it in action.

From Your Inbox to Your Smart Speaker

One of the oldest and most familiar examples is the spam filter in your email. These systems are constantly performing text classification, a core NLP task. They scan incoming emails, looking at words, phrases, and even sentence structure to predict whether a message is legit or just junk.
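Naive Bayes is one classic technique for this kind of text classification, though real filters combine many more signals. Here is a minimal Python sketch, assuming an invented four-email training set, whitespace tokenization, and add-one smoothing:

```python
import math
from collections import Counter

# Tiny, invented training set ("ham" is the usual term for legitimate mail).
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch with the team on friday", "ham"),
]


def train(examples):
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab


def classify(text, model):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # Start from the log prior: how common this label is overall.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.split():
            # Add-one smoothing so an unseen word doesn't zero out the probability.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING)
print(classify("free prize money", model))        # spam
print(classify("team meeting on friday", model))  # ham
```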

Virtual assistants like Siri and Alexa are another perfect example. When you ask, "What's the weather like today?", a whole lot happens in just a few seconds.

First, speech recognition kicks in to turn your voice into text. Then, the system uses intent detection to figure out what you really want: in this case, a weather forecast. These assistants show how multiple NLP tasks work in harmony to create a seamless experience. If you're curious how these systems hold such fluid conversations, you can learn more about conversational AI.
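Intent detection can be sketched, very roughly, as matching the words of a request against keywords for each possible intent. The intent names and keyword lists below are hypothetical; real assistants use trained classifiers, and this sketch assumes speech recognition has already produced the text.

```python
import re

# Hypothetical intents and their trigger keywords.
INTENT_KEYWORDS = {
    "get_weather": {"weather", "forecast", "rain", "temperature"},
    "play_music": {"play", "song", "music"},
    "set_timer": {"timer", "remind", "alarm"},
}


def detect_intent(utterance: str) -> str:
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    # Score each intent by how many of its keywords appear in the request.
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


print(detect_intent("What's the weather like today?"))  # get_weather
print(detect_intent("Play my favorite song"))           # play_music
print(detect_intent("Tell me a joke"))                  # unknown
```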

The real power of NLP isn't just in knowing individual words, but in piecing together context and intent from messy, everyday human language to perform a specific, useful action.

Machine translation tools like Google Translate also lean heavily on advanced NLP. They use complex models to analyze a sentence in one language and generate its equivalent in another, capturing grammar and meaning with ever-increasing accuracy. Speech is another place this technology shows up; to see how it performs in the real world, you can explore some of the best speech-to-text solutions out there today.

Understanding Public Opinion at Scale

It’s not just about personal gadgets. Businesses use NLP to make sense of massive amounts of public feedback. A technique called sentiment analysis scans social media posts, product reviews, and news articles to determine the emotional tone behind the text. Is it positive, negative, or neutral?
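The simplest form of sentiment analysis just adds up positive and negative words. Here is a minimal lexicon-based sketch in Python; the word list and scores are hypothetical, and production systems typically use trained models instead.

```python
import re
from collections import Counter

# A tiny, hypothetical sentiment lexicon: +1 for positive words, -1 for negative.
LEXICON = {
    "love": 1, "great": 1, "excellent": 1, "happy": 1,
    "hate": -1, "broken": -1, "terrible": -1, "slow": -1,
}


def sentiment(text: str) -> str:
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(t, 0) for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "I love this phone, the camera is excellent!",
    "Terrible battery and the screen arrived broken.",
    "It arrived on Tuesday.",
]
for review in reviews:
    print(sentiment(review), "-", review)

# Tallying labels across many reviews gives a quick read on overall opinion.
print(Counter(sentiment(r) for r in reviews))
```

Word counting like this is also easy to fool: "Oh, great, another meeting" would score as positive.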

This gives companies a powerful way to gauge public opinion on a new product launch or marketing campaign almost instantly. By automatically analyzing thousands of comments, businesses can spot trends and address customer concerns far faster than any human team ever could. Each of these applications shows just how practical it is to teach computers our language.

Why Human Language Is So Tricky for Computers

For all the progress we've seen in AI, human language remains one of the toughest nuts for a computer to crack. Unlike programming languages built on rigid, logical rules, our everyday speech is messy, fluid, and steeped in context that machines just don't get instinctively. This is the core challenge NLP is always trying to solve.

This isn’t just a minor bug. It’s woven into the very fabric of how we communicate. A few key things about our language make it a constant hurdle for even the most powerful algorithms.

The Problem of Ambiguity

One of the biggest headaches for NLP is ambiguity. So many words have multiple meanings, and we humans use context to figure out which one is right without even thinking about it.

Take the word "bank." A computer has to figure out if you’re talking about a financial institution or the side of a river. For a person, the sentence "He tied the boat to the bank" makes the meaning crystal clear. For a machine, it takes some serious analytical heavy lifting to land on the right interpretation.
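One simple way to use the surrounding words is to compare them against cue words for each sense, a heavily simplified relative of the classic Lesk algorithm. The sense inventory and cue words below are invented for illustration.

```python
import re

# Hypothetical sense inventory: each sense of "bank" with cue words
# that tend to appear near it. Real systems learn these associations from data.
SENSES = {
    "financial institution": {"money", "account", "loan", "deposit", "cash", "teller"},
    "side of a river": {"river", "boat", "water", "shore", "fishing", "tied"},
}


def disambiguate(sentence: str) -> str:
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    # Choose the sense whose cue words overlap most with the sentence.
    return max(SENSES, key=lambda sense: len(SENSES[sense] & context))


print(disambiguate("He tied the boat to the bank"))                      # side of a river
print(disambiguate("She opened an account at the bank to deposit cash"))  # financial institution
```

When no cue words appear at all, this falls back to the first sense listed, which is one reason real systems rely on learned context models instead.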

The real challenge of NLP isn't just teaching computers a dictionary of words. It's teaching them to grasp the unwritten rules and hidden context that humans understand instantly.

This problem gets even worse at the sentence level. The phrase "I saw a man on a hill with a telescope" is a classic example. Who has the telescope? Is it you, or is it the man? Without more information, the meaning is up in the air, creating a real problem for any machine trying to process it literally.

Beyond the Literal Meaning

On top of that, our language is packed with non-literal expressions that can send a machine spinning. These are the things we use every day to add color, emotion, and nuance to what we say.

- Sarcasm and Irony: When someone says, "Oh, great, another meeting," the literal meaning is positive. But we all know the true sentiment is probably the exact opposite. NLP models have to learn to pick up on these subtle, context-driven cues.

- Idioms and Slang: Phrases like "he kicked the bucket" or calling something "sick" have meanings that are completely disconnected from their individual words. Language is also always changing, with new slang popping up constantly. This forces NLP models into a perpetual state of catch-up.

These difficulties are exactly why understanding the basics of natural language processing is so important. They show the incredible work that goes into making our interactions with technology feel even remotely natural.

Frequently Asked Questions About NLP

Now that we’ve walked through the basics of natural language processing, a few common questions usually pop up. Let's clear up some key distinctions and point you in the right direction if you're ready to learn more.

What Is The Difference Between NLP and NLU?

It helps to think of Natural Language Understanding (NLU) as a specialized part of the bigger NLP picture. While NLP covers the entire process of turning messy human language into structured data (like tokenization and parsing), NLU zeroes in on one specific goal: figuring out the meaning and intent.

For example, NLP is what breaks a sentence down into its component parts. NLU is what determines if the user is actually asking a question, barking a command, or just making a casual statement. It’s the comprehension piece of the puzzle.

What Skills Are Needed for NLP?

Jumping into the NLP field usually requires a mix of different skills. A solid foundation in programming, especially with Python, is a huge help. You’ll also want a good handle on machine learning concepts and statistics, since that’s what powers the models you'll be building and training.

But honestly, the most valuable skill is just plain old curiosity. The ability to ask smart questions about language and data is what really drives innovation and helps crack the toughest problems in this space.

If you want to go deeper into specific NLP topics, you can explore the Parakeet AI blog, a great resource for new developments and real-world examples.

Ready to put NLP to work for your business? Chatiant lets you build custom AI chatbots trained on your own data. Create an AI agent that can book meetings, look up customer information, or act as a Helpdesk assistant right inside Google Chat or on your website. Learn more at https://www.chatiant.com.